Towards Recommender Engineering

Reproducible Research and Algorithm Personalities

Michael Ekstrand

GroupLens Research

Dept. of Computer Science and Engineering

University of Minnesota

GroupLens Research

Dept. of Computer Science and Engineering

University of Minnesota

Michael Ekstrand

B.S. CprE, Iowa State University (2007)

Finishing Ph.D CS, University of Minnesota (2014)

GroupLens Research (HCI & social computing)

Advised by Joseph Konstan and John Riedl

http://elehack.net

Research Overview

Helping users find, filter, navigate, and understand large-scale information spaces.

Topics

- Recommender systems

- Help search

- Wiki history browsing

- HPC network topology visualization

Methods

- Offline data analysis & experiments

- User studies

- System building

Current Research Objective

Recommender research should be

so we can engineer information solutions and understand recommender-assisted decision-making

Recent & Ongoing Work

Infrastructure

- LensKit toolkit

- Reproducible research

- Reproduce algorithms

- Deploy results

Experiments

- Offline w/ public data

- User studies

Overview

Overview

Recommender Systems

Recommender Systems

recommending items to users

Recommender Research

- Defined more by application than technology

- Existed as early as 1969 (Rich's Grundy)

- Modern form introduced in 1994 (Resnick et al.)

RecSys conference in 8th year, has ~300 attendees

- Mix of HCI, ML/IR, business

- Strong industrial presence

Common Approaches

Non-personalized

Content-based [Balabanović, 1997; others]

Collaborative filtering

- User-based [Resnick et al., 1994]

- Item-based [Sarwar et al., 2001]

- Matrix factorization [Sarwar et al., 2000; Funk, 2006]

Hybrid approaches [Burke, 2002]

Learning to Rank

Evaluating Recommenders

Many measurements:

- ML/IR-style data set experiments

- User studies

A/B testing

- Engagement metrics

- Business metrics

Common R&D Practice

- Develop recommender tech (algorithm, UI, etc.)

- Test on particular data/use case

- See if it is better than baseline

- Publish or deploy

Learned: is new tech better for target application?

Not learned: for what applications is new tech better? why?

Algorithms are Different

- Algorithms perform differently

- No reason to believe one size fits all

- Quantitatively similar algorithms can have qualitatively different results [McNee, 2006]

- Different algorithms make different errors [Ekstrand, 2012]

- Opportunity to tailor system to task

- Doesn't even count other system differences!

Building a Boat Shed

An Analogy

Current practice:

- build shed

- A/B test: roof with 2 different materials

- measure winter survival

- buy a new boat

Building a Boat Shed

An Analogy

Better practice

- compute span, snow load, etc.

- determine adequate roofing structure

- build boat shed

- enjoy the boat next summer

Recommender Engineering

Recommender engineering is

- designing and building a recommender system

- for a particular application

- from well-understood principles of algorithm behavior, application needs, and domain properties.

What Do We Need?

To enable recommender engineering, we to understand:

- Algorithm behaviors and characteristics

- Relevant properties of domains, use cases, etc. (applications)

- How these interactions affect suitability

All this needs to be reproduced, validated, and generalizable.

My work

- LensKit

enables reproducible research on wide variety of algorithms

- Offline experiments

- validate LensKit

- demonstrate algorithm differences

improve engineering

- User study

- obtain user judgements of algorithm differences

currently ongoing

Overview

LensKit is an open-source toolkit for building, researching, and studying recommender systems.

LensKit

- build

- prototype and study recommender applications

- research algorithms with users

deploy research results in live systems

- research

- reproduce and validate results

- new experiments with old algorithms

- make research easier

provide good baselines

- study

learn from production-grade implementations

LensKit Features

- Common APIs for recommender use cases

- Infrastructure for building and using algorithms

- Implementations of well-known algorithms

- Evaluation framework for offline data analysis

- Tools for working with algorithms, models, and configurations

LensKit Project

- Started in 2010

- Supported by undergraduate & graduate students, staff, etc.

- ~43K lines of Java (with some Groovy)

- Open source/free software under LGPLv2+

- Developed in public (GitHub, Travis CI)

- Competitors: Mahout, MyMediaLite, others

Design Challenges

We want:

- Flexibility (reconfigure to match and try many algorithm setups)

- Performance (so it works on real data with reasonable resources)

- Readability (so it can be understood)

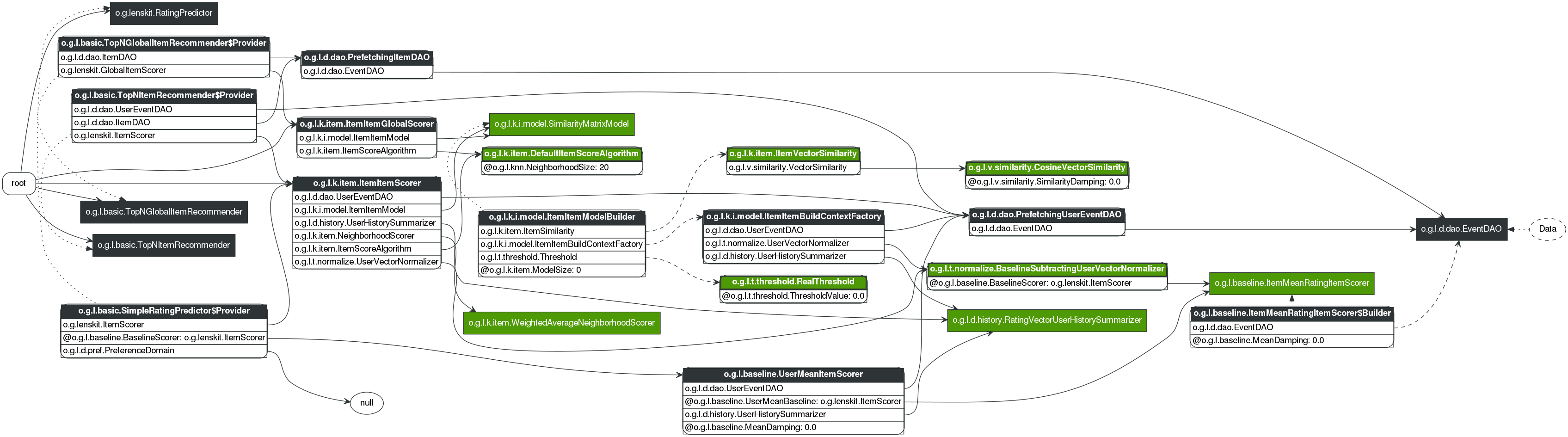

Component Architecture

For flexibility and ease-of-use, we use:

- Modular algorithm designs

Automatic tooling for recommender components

- Practical configuration

- Efficient experimentation

- Easy web and database integration

Modular Algorithms

- Break algorithms into separate components where feasible

- Neighborhood finders

- Similarity functions

- Normalizers

- Anything else!

- Full recommender consists of many interoperating components

- Components specified with interfaces, implementations can be swapped out

Dependency Injection

Components receive their dependencies from whatever creates them.

public UserUserCF() {

similarity = new CosineSimilarity();

}public UserUserCF(SimilarityFunction sim) {

similarity = sim;

}A Little Problem

How do we instantiate this mess?

Dependency Injectors

- Extract dependencies from class definitions

- Instantiate required components automatically

Several of them in wide use:

- Spring

- Guice

- PicoContainer

DI Configuration

// use item-item CF to score items

bind ItemScorer to ItemItemScorer

// subtract baseline score from user ratings

bind UserVectorNormalizer

to BaselineSubtractingUserVectorNormalizer

// use user-item mean rating as baseline

bind (BaselineScorer, ItemScorer) to UserMeanItemScorer

bind (UserMeanBaseline, ItemScorer)

to ItemMeanRatingItemScorer

// the rest configured with defaultsGrapht

Existing containers have some limitations:

- Difficult to configure composed configurations

- Limited static analysis support

So we built Grapht:

- Easy context-sensitive configuration

- Pre-compute full dependency graph

Composable Components

Basic DI binds interfaces to implementations

What if same interface appears multiple times

- and you want different implementations?

- or different configs of same implementation?

Naturally arises in our domain

- wrapper components

- hybrid recommenders

Difficult to configure in existing systems

Context-Sensitive Policy

Context-Sensitive Policy

within (UserVectorNormalizer) {

bind (BaselineScorer, ItemScorer) to UserMeanItemScorer

}UserMeanItemScorerused when satisfying a dependency of aUserVectorNormalizer- Other baseline scorer dependencies unaffected

- Allows arbitrarily composable components

Context-Sensitive Policy

Surprisingly useful

- We created it to allow for configuring hybrids

- But it's a better solution for many other problems

Strictly more expressive than qualifiers

Static Dependency Graph

We compute the object graph before instantiating objects.

This allows us to

- analyze & debug configurations without building models

- automatically separate pre-built and runtime components

- reuse shared components in evaluator

Grapht Summary

To meet LensKit's needs, we created a new dependency injector that:

- Is built on a well-defined mathematical foundation (graph manipulation)

- Allows static analysis of component dependencies

- Enables arbitrarily composable components to be easily configured

Initial Results

RecSys 2011

- Reproduced comparisons & tunings of seminal algorithms

- Revisited previous best practices

- Discovered some new best practices

Asked: does rank-based evaluation affect design decisions?

- Not for simple choices

When Recommenders Fail

Short paper, RecSys 2012

Q1: Which algorithm has most successes (ε ≤ 0.5)?

Qn + 1: Which has most successes where 1…n failed?

| Algorithm | # Good | %Good | Cum. % Good |

|---|---|---|---|

| ItemItem | 1044371 | 52.23 | 52.23 |

| UserUser | 166008 | 8.30 | 60.53 |

| Lucene | 90018 | 4.50 | 65.03 |

| FunkSVD | 53313 | 2.67 | 67.70 |

| Mean | 21617 | 1.08 | 68.78 |

| Unexplained | 624291 | 31.22 | 100.00 |

Parameter Tuning

- Many algorithms have tunable parameters

- Usually tuned by grid search

- Very expensive

- Combinations often skipped

- Makes engineering search space huge

- Goal: reduce space and automate search

- Some results, still working on it

Outcomes of LensKit

- Public, open-source software now at version 2.0

- Basis for Coursera MOOC on recommender systems (~1000 students)

- Reproduced and extended various research results (RecSys 2011)

- New research results

- Recommender errors (RecSys 2012)

- Algorthmic analysis for interface research (Kluver et al., 2012; Nguyen et al., 2013)

- Implementation behind MovieLens and BookLens

- Used for CHI/CSCW Confer system

Overview

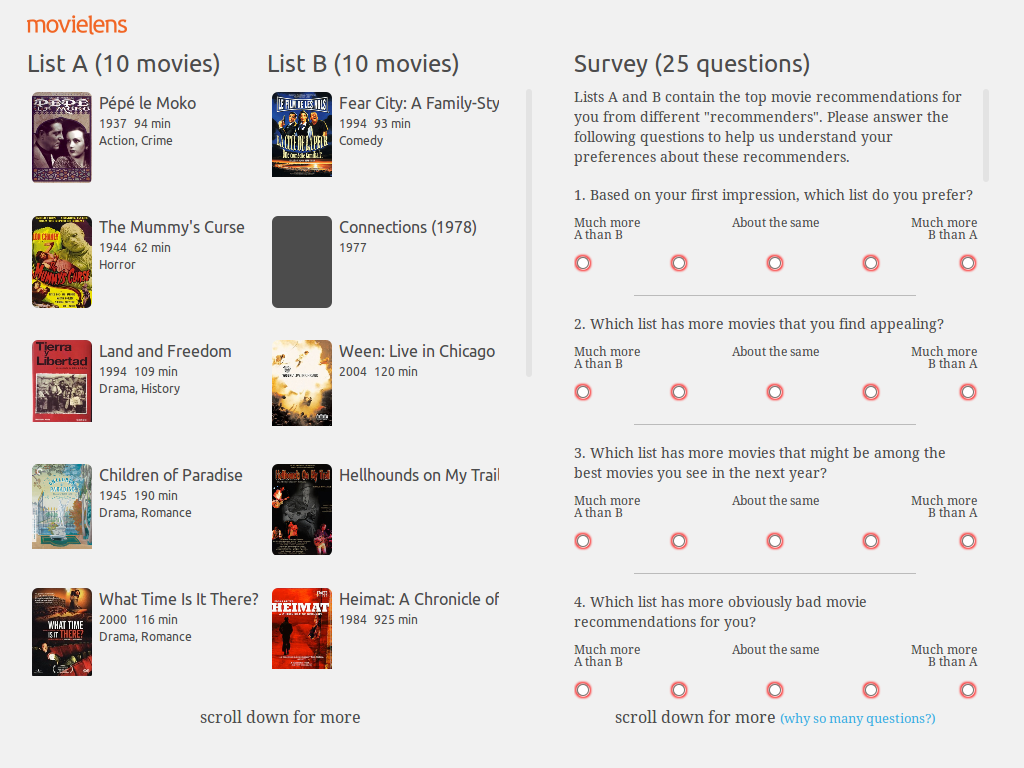

Context: MovieLens

- Movie recommendation service & community

- 2500–3000 unique users/month

- Extensive tagging features

User Study Goal

Advance recommender engineering by studying how users perceive the movie recommendations from different algorithms to differ.

Experiment Outcomes

- RQ1

Do users perceive movie lists from different algorithms to be different?

- RQ2

- How do they perceive these lists to be different?

Learn context-relevant properties for algorithms.

- RQ3

What characteristics predict their preference for one list over the other?

- Side Effect

Collect data for calibrating offline metrics.

Algorithms

Three well-known algorithms for recommendation:

- User-user CF

- Item-item CF

- Biased matrix factorization (FunkSVD)

Each user assigned 2 algorithms

Predictions

Predicted ratings influence list perception.

To control, 3 prediction treatments:

- Standard raw predictions (0.5–5 stars)

- No predictions

- Normalized predictions

Each user assigned 1 condition

Survey Design

Initial ‘which do you like better?’

25 questions

- ‘Which list has more movies that you find appealing?’

- ‘much more A than B’ to ‘much more B than A’

Final ‘which do you like better?’

Analysis features

- joint evaluation

- users compare 2 lists

- judgment-making different from separate eval

enables more subtle distinctions

- factor analysis

- 25 questions measure 5 factors

- more robust than single questions

structural equation model tests relationships

Structural Equation Model

List Properties

Secondary goal: identify measurable recommendation list properties that predict user judgements.

- Diversity

- Popularity

- Others

Outcomes:

- Understanding of features underlying user judgements

- Metrics for further offline analysis

Expected Contributions

User-perceived differences between 3 algorithms

Dimensions along which users perceive them to be different (5 factors)

Data set of user judgments of recommender differences

- feed back in to offline analysis

- calibrate offline metrics

Currently running with almost 300 completions.

Overview

Toward Recommender Engineering

The road to recommender engineering is long

- Extensive, validated research on algorithm characteristics

- Both offline and user studies

Carry on with my students and collaborators

- More user studies

- Informing offline analysis with user results

- Integrated into applications

Skills and Techniques

My research involves several major efforts:

- System building (and distribution)

- Offline data analysis

- User studies

- Interdisciplinary collaboration

Applications

This work

Future

Additional Directions

Interactive recommendation

- Recommend candidates, user accepts/rejects

- Recommender and user ‘work together’

- Recommenders as tools, not just agents

- Multi-algorithm systems

- Diversity and decision support

Discovery for decentralized communiation

Funding

NSF funded (IIS, HCC)

- GroupLens has had good success with NSF funding for recommender research

- Cyber infrastructure funding may be available

Medical could get NIH funding

Thank you!

Also thanks to

- GroupLens Research for amazing support

- NSF for funding research

- The Noun Project for great icons

- ‘Document’ by Rob Gill

- ‘Test Tube’ by Olivier Guin

- ‘Experiment’ by Icons Pusher

- ‘Future’ by Megan Sheehan